3D Sketch Map research meeting in Münster

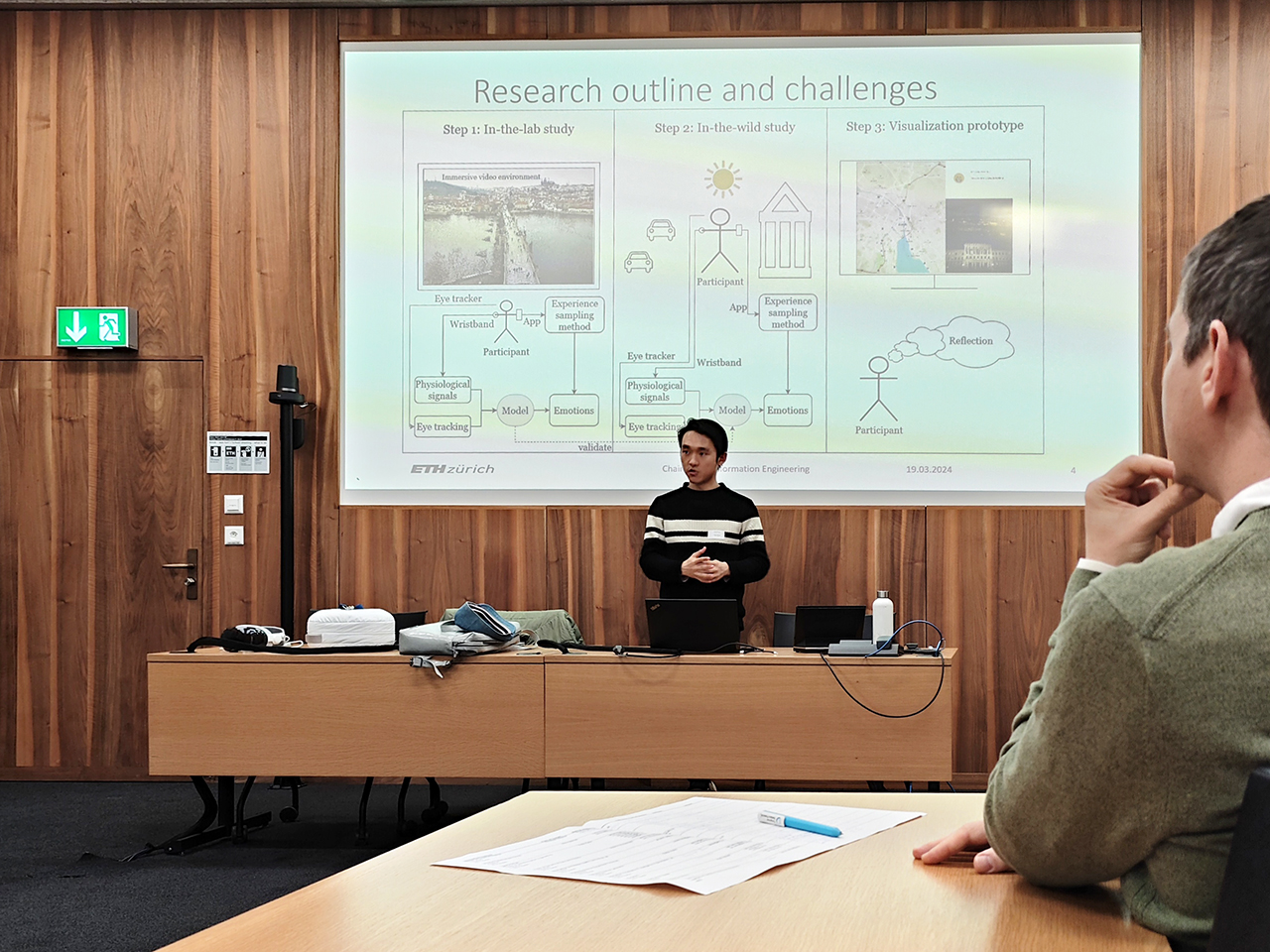

The 3D Sketch Map project team had a very productive research meeting in Münster. Many thanks to Prof. Jakub Krukar for the excellent organization in this wonderful city! In this project, we investigate 3D sketch maps from theoretical, empirical, cognitive, and tool-related perspectives, with a particular focus on Extended Reality (XR) technologies. Sketch mapping is an established research method in fields that study human spatial decision-making and information processing, such as navigation and wayfinding. Although space is naturally three-dimensional (3D), contemporary research has focused on assessing individuals’ spatial knowledge with two-dimensional (2D) sketches. For many domains, however, such as aviation or the cognition of complex multilevel buildings, it is essential to study people’s 3D understanding of space, which is not possible with current 2D methods. At the meeting, we shared our latest research results and discussed future plans.

Exciting news! The geoGAZElab will be participating in the MSCA Doctoral Network “Eyes for Interaction, Communication, and Understanding (
