We’ll present one full paper and two workshop position papers at CHI in Glasgow this year:

Workshop: Designing for Outdoor Play (4th May, Saturday – 08:00 – 14:00, Room: Alsh 1)

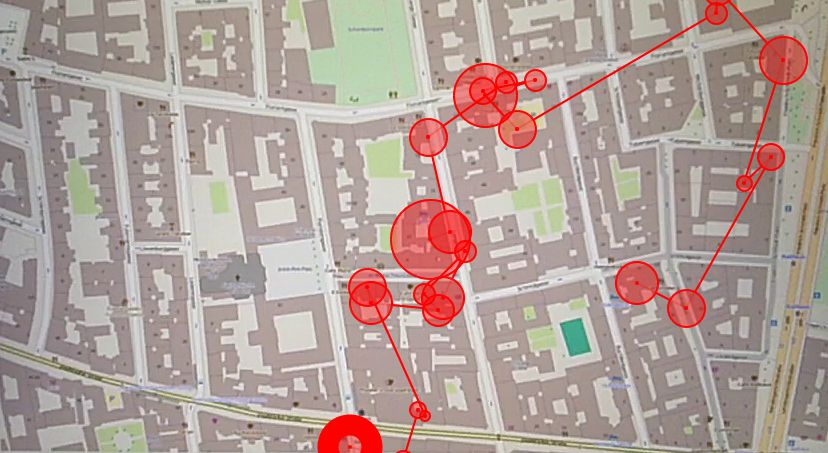

Kiefer, P. (2019) Gaze-guided narratives for location-based games. In CHI 2019 Workshop on “Designing for Outdoor Play”, Glasgow, U.K., DOI: https://doi.org/10.3929/ethz-b-000337913

Workshop: Challenges Using Head-Mounted Displays in Shared and Social Spaces (5th May, Sunday – 08:00 – 14:00, Room: Alsh 2)

Göbel, F., Kwok, T.C.K., and Rudi, D. (2019) Look There! Be Social and Share. In CHI 2019 Workshop on “Challenges Using Head-Mounted Displays in Shared and Social Spaces”, Glasgow, U.K., DOI: https://doi.org/10.3929/ethz-b-000331280

Paper Session: Audio Experiences (8th May, Wednesday – 14:00 – 15:20, Room: Alsh 1)

Kwok, T.C.K., Kiefer, P., Schinazi, V.R., Adams, B., and Raubal, M. (2019) Gaze-Guided Narratives: Adapting Audio Guide Content to Gaze in Virtual and Real Environments. In CHI Conference on Human Factors in Computing Systems Proceedings (CHI 2019), May 4-9, Glasgow, U.K. [PDF]

We are looking forward to seeing you in Glasgow!

Each of these contributions is part of either the LAMETTA or the IGAMaps project.

Fabian Göbel has successfully completed his doctoral thesis on “Visual Attentive User Interfaces for Feature-Rich Environments”. The Department conference approved the doctoral graduation at its last meeting. Congratulations, Fabian!
