PostDoc position in 3D Sketch Maps

In addition to the open PhD position, which has been announced here earlier, we’re also offering an open PostDoc position in the 3D Sketch Maps project.


We’re very much looking forward to the start of the 3D Sketch Maps project, for which we have now announced an open PhD position: Interaction with 3D Sketch Maps in Extended Reality.

The 3D Sketch Maps project, funded by the Swiss National Science Foundation in the scope of the Sinergia funding program, investigates 3D sketch maps from a theoretical, empirical, cognitive, as well as tool-related perspective, with a particular focus on Extended Reality (XR) technologies. Sketch mapping is an established research method in fields that study human spatial decision-making and information processing, such as navigation and wayfinding. Although space is naturally three-dimensional (3D), contemporary research has focused on assessing individuals’ spatial knowledge with two-dimensional (2D) sketches. For many domains though, such as aviation or the cognition of complex multilevel buildings, it is essential to study people’s 3D understanding of space, which is not possible with the current 2D methods. Eye tracking will be used for the analysis of people’s eye movements while using the sketch mapping tools.

The 4-year project will be carried out jointly by the Chair of Geoinformation Engineering, the Chair of Cognitive Science at ETH Zurich (Prof. Dr. Christoph Hölscher), and the Spatial Intelligence Lab at the University of Münster (Prof. Dr. Angela Schwering).

Interested? Please check out the open PhD position on the ETH job board!

We’re glad that Adrian Sarbach has joined the geoGAZElab as a doctoral student on the project “The Expanded Flight Deck – Improving the Weather Situation Awareness of Pilots (EFDISA)”.

Adrian studied at EPFL (Bachelor) and ETH Zurich (Master), among others, obtaining his degrees in electrical engineering. He wrote his MSc thesis in collaboration with Swiss International Air Lines, on the topic of tail assignment optimization.

Our accepted paper “Gaze-Adaptive Lenses for Feature-Rich Information Spaces” will be presented at ACM ETRA 2021:

May 25, 2021, at 11:00 – 12:00 and 18:00 – 19:00 in “Posters & Demos & Videos”

May 26, 2021, at 14:45 – 16:15 in Track 1: “Full Papers V”

Join the virtual conference for a chat!

https://etra.acm.org/2021/schedule.html

Kuno Kurzhals has left us for his new position as Junior Research Group Lead in the Cluster of Excellence Integrative Computational Design and Construction for Architecture (IntCDC) at the University of Stuttgart, Germany.

We wish him all the best and thank him for the contributions he has made to the geoGAZElab during his time as a PostDoc!

We are excited to have received funding from the Swiss Federal Office of Civil Aviation (BAZL) for a new project, starting in July 2021: “The Expanded Flight Deck – Improving the Weather Situation Awareness of Pilots (EFDISA)”. The project aims at improving contemporary pre-flight and in-flight representations of weather data for pilots. This will allow pilots to better perceive, understand, and anticipate meteorological hazards. The project will be carried out in close collaboration with industry partners and professional pilots (Swiss International Air Lines & Lufthansa Systems).

We are looking for a highly motivated doctoral student for this project. Applications are now open.

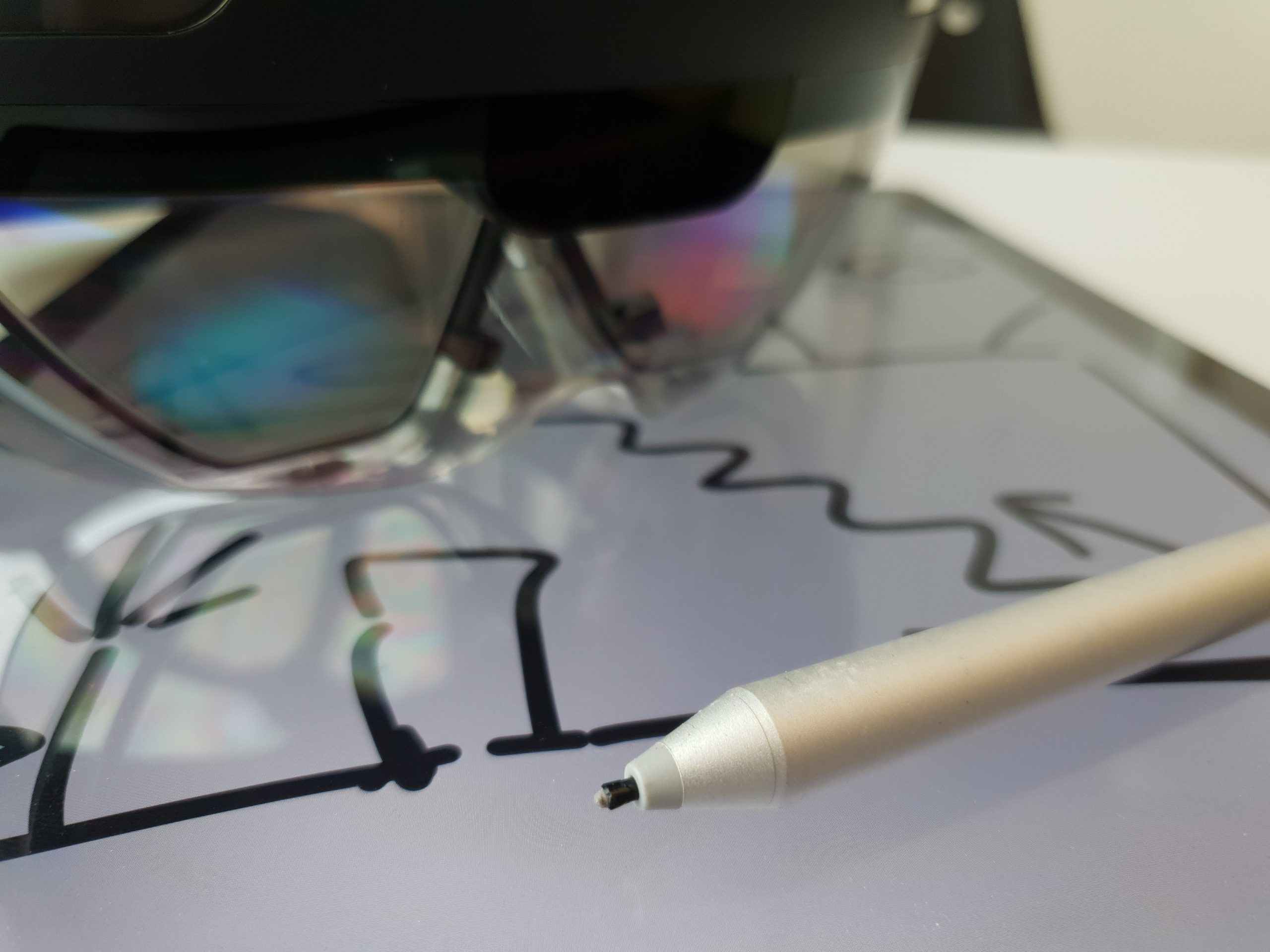

As an interdisciplinary project, the three Geomatics Master students Laura Schalbetter, Tianyu Wu and Xavier Brunner have developed an indoor navigation system for Microsoft HoloLens 2. The system was implemented using ESRI CityEngine, Unity, and Microsoft Visual Studio.

Check out their video:

After a coronavirus-related delay in the hiring process, we’re excited to announce that Suvodip Chakraborty will start as a PhD student in our Singapore-based project on Communicating Predicted Disruptions in the scope of the Future Resilient Systems 2 research program. Suvodip will start in January 2021.

Suvodip holds a Master of Science from the Indian Institute of Technology Kharagpur. His Master’s thesis was titled “Design of Electro-oculography based wearable systems for eye movement analysis”.

As last year, we are involved in the organization of ETRA 2021, the ACM Symposium on Eye Tracking Research & Applications. Peter is again co-chairing the Demo&Video Track, for which the Call is now available online. The submission deadline is 2 February 2021.

Pilots not only have to make the right decisions, but they have to do it quickly and process a lot of information – especially visual information. In a unique project, ETH Zurich and Swiss International Air Lines have investigated what the eyes of pilots do in this process.

Martin Raubal, Professor of Geoinformation Engineering at ETH Zurich, appreciates the practical relevance of this research collaboration, which could contribute to increasing flight safety. Anyone who wants to develop it further should take off their blinders and think outside the box, says Christoph Ammann, captain and instructor at Swiss. And ETH Zurich is an ideal partner for this.

Watch the video on Vimeo!

We have revised and redesigned our website (http://geogaze.ethz.ch/), including a new logo. Have a look!

Kiefer, P., Adams, B., Kwok, T., Raubal, M. (2020) Modeling Gaze-Guided Narratives for Outdoor Tourism. In: McCrickard, S., Jones, M., and Stelter, T. (eds.): HCI Outdoors: Theory, Design, Methods and Applications. Springer International Publishing (in print)

We’ve been involved in the organization of two co-located events at this year’s ETRA conference: Eye Tracking for Spatial Research (ET4S) and Eye Tracking and Visualization (ETVIS). Even though ETRA and all co-located events had to be canceled, the review process was completed as usual, and accepted papers are now available in the ETRA Adjunct Proceedings in the ACM Digital Library.

Accepted ET4S papers are also linked from the ET4S website.

The instructor assistant system (iAssyst) that we developed as part of our research collaboration with Swiss International Air Lines is being featured in an article by innoFRAtor, the innovation portal of the Fraport AG.

You may read more about the system in our related research article: Rudi D., Kiefer P., and Raubal M. (2020). The Instructor Assistant System (iASSYST) – Utilizing Eye Tracking for Commercial Aviation Training Purposes. Ergonomics, vol. 63: no. 1, pp. 61-79, London: Taylor & Francis, 2020. DOI: https://doi.org/10.1080/00140139.2019.1685132

Our project on Enhanced flight training program for monitoring aircraft automation with Swiss International Air Lines, NASA, and the University of Oregon was officially concluded at the end of last year.

Our paper “Towards Pilot-Aware Cockpits” has been published in the proceedings of the 1st International Workshop on Eye-Tracking in Aviation (ETAVI 2020):

Lutnyk L., Rudi D., and Raubal M. (2020). Towards Pilot-Aware Cockpits. In Proceedings of the 1st International Workshop on Eye-Tracking in Aviation (ETAVI 2020), ETH Zurich. DOI: https://doi.org/10.3929/ethz-b-000407661

Abstract. Eye tracking has a longstanding history in aviation research. Amongst others it has been employed to bring pilots back “in the loop”, i.e., create a better awareness of the flight situation. Interestingly, there exists only little research in this context that evaluates the application of machine learning algorithms to model pilots’ understanding of the aircraft’s state and their situation awareness. Machine learning models could be trained to differentiate between normal and abnormal patterns with regard to pilots’ eye movements, control inputs, and data from other psychophysiological sensors, such as heart rate or blood pressure. Moreover, when the system recognizes an abnormal pattern, it could provide situation specific assistance to bring pilots back in the loop. This paper discusses when pilots benefit from such a pilot-aware system, and explores the technical and user oriented requirements for implementing this system.

Edit. The publication is part of PEGGASUS. This project has received funding from the Clean Sky 2 Joint Undertaking under the European Union’s Horizon 2020 research and innovation program under grant agreement No. 821461

The second phase of the FRS programme at the Singapore-ETH Centre officially started on April 1st with an online research kick-off meeting. It was launched in the midst of a global crisis – COVID-19, highlighting the need to better understand and foster resilience. Within FRS-II there is a particular emphasis on social resilience to enhance the understanding of how socio-technical systems perform before, during and after disruptions.

GeoGazeLab researchers will contribute within a research cluster focusing on distributed cognition (led by Martin Raubal). More specifically, we will develop a visualization, interaction, and notification framework for communicating predicted disruptions to stakeholders. Empirical studies utilizing eye tracking and gaze-based interaction methods will be part of this project, which is led by Martin Raubal and Peter Kiefer.

Our paper “Gaze-Adaptive Lenses for Feature-Rich Information Spaces” has been accepted at ACM ETRA 2020 as a full paper:

Göbel, F., Kurzhals K., Schinazi V. R., Kiefer, P., and Raubal, M. (2020). Gaze-Adaptive Lenses for Feature-Rich Information Spaces. In Proceedings of the 12th ACM Symposium on Eye Tracking Research & Applications (ETRA ’20), ACM. DOI: https://doi.org/10.1145/3379155.3391323

Our workshop contribution “Gaze-Aware Mixed-Reality: Addressing Privacy Issues with Eye Tracking” has been accepted at the “Workshop on Exploring Potentially Abusive Ethical, Social and Political Implications of Mixed Reality in HCI” at ACM CHI 2020:

Fabian Göbel, Kuno Kurzhals, Martin Raubal, and Victor R. Schinazi (2020). Gaze-Aware Mixed-Reality: Addressing Privacy Issues with Eye Tracking.

In CHI 2020 Workshop on Exploring Potentially Abusive Ethical, Social and Political Implications of Mixed Reality in HCI (CHI 2020), ACM.

The proceedings of the 1st International Workshop on Eye-Tracking in Aviation (ETAVI) 2020 have been published here.

We thank all program committee members for their efforts and great support; it is very much appreciated.

Furthermore, we thank all authors of the 15 excellent articles that were accepted for ETAVI 2020. We regret that the event had to be cancelled due to COVID-19.

Edit. Some of the organizers from ETH are part of the PEGGASUS project. This project has received funding from the Clean Sky 2 Joint Undertaking under the European Union’s Horizon 2020 research and innovation program under grant agreement No. 821461

Our paper “A View on the Viewer: Gaze-Adaptive Captions for Videos” has been accepted at ACM CHI 2020 as a full paper:

Kurzhals K., Göbel F., Angerbauer K., Sedlmair M., Raubal M. (2020) A View on the Viewer: Gaze-Adaptive Captions for Videos. In CHI Conference on Human Factors in Computing Systems Proceedings (CHI 2020), ACM (accepted)

Abstract. Subtitles play a crucial role in cross-lingual distribution of multimedia content and help communicate information where auditory content is not feasible (loud environments, hearing impairments, unknown languages). Established methods utilize text at the bottom of the screen, which may distract from the video. Alternative techniques place captions closer to related content (e.g., faces) but are not applicable to arbitrary videos such as documentations. Hence, we propose to leverage live gaze as indirect input method to adapt captions to individual viewing behavior. We implemented two gaze-adaptive methods and compared them in a user study (n=54) to traditional captions and audio-only videos. The results show that viewers with less experience with captions prefer our gaze-adaptive methods as they assist them in reading. Furthermore, gaze distributions resulting from our methods are closer to natural viewing behavior compared to the traditional approach. Based on these results, we provide design implications for gaze-adaptive captions.

The 5th edition of ET4S is taking place as a co-located event of ETRA 2020 in Stuttgart, Germany, between 2 and 5 June 2020.

The 1st Call for Papers is now available online.

On the 25th of November we presented the final results of our project entitled “Enhanced flight training program for monitoring aircraft automation”.

The project was partially funded by the Swiss Federal Office of Civil Aviation (BAZL), was led by Swiss International Air Lines Ltd., and was supported by Prof. Dr. Robert Mauro from the Department of Psychology (University of Oregon) and Dr. Immanuel Barshi from NASA Ames Research Center, Human Systems Integration Division (NASA).

As part of this very successful project we developed a system that provides instructors with more detailed insights concerning pilots’ attention during training flights, to specifically improve instructors’ assessment of pilot situation awareness.

Our project has recently been featured in various media. ETH News has published a thorough article on our project, “Tracking the eye of the pilot”, and several other articles have referenced this publication. Additionally, there have been two radio interviews with Prof. Dr. Martin Raubal, at Radio Zürisee and SRF4 (both in German).

Moreover, there have been publications during the course of the project:

Rudi, D., Kiefer, P. & Raubal, M. (2019). The Instructor Assistant System (iASSYST) – Utilizing Eye Tracking for Aviation Training Purposes. Ergonomics. https://doi.org/10.1080/00140139.2019.1685132.

Rudi, D., Kiefer, P., Giannopoulos, I., & Raubal, M. (2019). Gaze-based interactions in the cockpit of the future – a survey. Journal on Multimodal User Interfaces. Springer. Retrieved from https://link.springer.com/article/10.1007/s12193-019-00309-8.

Rudi D., Kiefer P. & Raubal, M. (2018). Visualizing Pilot Eye Movements for Flight Instructors. In ETVIS ’18: 3rd Workshop on Eye Tracking and Visualization. ACM, New York, NY, USA, Article 7, 5 pages. http://doi.acm.org/10.1145/3205929.3205934. Best Paper Award Winner.

Our paper “The instructor assistant system (iASSYST) – utilizing eye tracking for commercial aviation training purposes” has been published in the Ergonomics Journal:

Rudi, D., Kiefer, P., & Raubal, M. (2019). The instructor assistant system (iASSYST)-utilizing eye tracking for commercial aviation training purposes. Ergonomics.

Abstract. This work investigates the potential of providing commercial aviation flight instructors with an eye tracking enhanced observation system to support the training process. During training, instructors must deal with many parallel tasks, such as operating the flight simulator, acting as air traffic controllers, observing the pilots and taking notes. This can cause instructors to miss relevant information that is crucial for debriefing the pilots. To support instructors, the instructor ASsistant SYSTem (iASSYST) was developed. It includes video, audio, simulator and eye tracking recordings. iASSYST was evaluated in a study involving 7 instructors. The results show that with iASSYST, instructors were able to support their observations of errors, find new errors, determine that some previously identified errors were not errors, and to reclassify the types of errors that they had originally identified. Instructors agreed that eye tracking can help identifying causes of pilot error.

We are again involved in the organization of ETRA 2020, the ACM Symposium on Eye Tracking Research & Applications, taking place in Stuttgart in June 2020.

As one of our activities at ETRA 2020, Peter is co-chairing the Demo&Video Track. The Call for Demos&Videos is now online and open for submissions.

As part of our involvement in the upcoming Future Resilient Systems II research programme, we are looking for a PhD candidate working on the development of a visualization, interaction and notification framework for communicating disruptions predicted from weak signals.

Employment will be at the Singapore-ETH Centre, workplace Singapore.

More details and application here.

We’re very happy to welcome Victor Schinazi as a new team member! He’ll be working with us for 4 months before joining the faculty of Psychology at Bond University in Australia.

David Rudi successfully defended his doctoral thesis (“Enhancing Spatial Awareness of Pilots in Commercial Aviation”) on 16 September. We cordially congratulate him and are happy that he’ll stay with us as a PostDoc starting in November!


We are glad to announce an invited talk by Sophie Stellmach on Eye Tracking and Mixed Reality as part of the VERA Geomatics Seminar.

Dr. Sophie Stellmach is a Senior Scientist at Microsoft where she explores entirely new ways to engage with and blend our virtual and physical realities in products such as Microsoft HoloLens. Being an avid eye tracking researcher for over a decade, she was heavily involved in the development of gaze-based interaction with HoloLens 2.

The talk will take place as part of the VERA Geomatics Seminar on Thursday, 10th October 2019, 5:00 p.m. at ETH Hönggerberg, HIL D 53.

Title: Multimodal Gaze-supported Input in Mixed Reality and its Promises for Spatial Research

On 11 September, Prof. Dr. Detlef Günther, the Vice President for Research and Corporate Relations of ETH Zurich, visited the D-BAUG department to learn about the exciting research activities of the different institutes.

Our institute was represented by Peter Kiefer, who summarized the research of the GeoGazeLab. The slides provide an overview of our research interests and current projects.

Edit. The presentation includes the PEGGASUS project. This project has received funding from the Clean Sky 2 Joint Undertaking under the European Union’s Horizon 2020 research and innovation program under grant agreement No. 821461

A quick reminder about the “1st International Workshop on Eye-Tracking in Aviation (ETAVI)”, which is going to take place in March 2020 in Toulouse, France.

The submission deadlines are:

Feel free to also forward the Call for Papers to any interested colleagues.

We look forward to seeing you there!

Edit. Some of the organizers from ETH are part of the PEGGASUS project. This project has received funding from the Clean Sky 2 Joint Undertaking under the European Union’s Horizon 2020 research and innovation program under grant agreement No. 821461